Llama 3.1 405B Chat with Web Search


Supported Languages

English: My native tongue!

Spanish: ¡Hola! I be conversin’ in Español, too!

French: Oui, je parle Français, matey!

German: Aye, ich spreche Deutsch, too!

Italian: Ciao! I be chattin’ in Italiano, matey!

Portuguese: Olá! I be speakin’ Português, too!

Dutch: Hallo! I be conversin’ in Nederlands, matey!

Russian: Привет! I be speakin’ Русский, too!

Chinese (Simplified and Traditional): Aye, I be speakin’ Mandarin, too!

Japanese: Konnichiwa! I be conversin’ in Japanese, too!

Korean: Aye, I be speakin’ Korean, too!

Arabic: مرحبا! I be conversin’ in العربية, too!

Hebrew: שלום! I be speakin’ עברית, too!

* Depending on your internet speed, loading the model online may take a few seconds.


Frequently Asked Questions for Llama 3.1

1. What is Meta Llama 3.1 405B?

Meta Llama 3.1 is Meta’s latest family of large language models, released in 8B, 70B, and 405B parameter sizes; the flagship 405B model has 405 billion parameters. The models support natural language processing tasks such as text generation, language translation, and conversational systems.

The easiest way to use Llama 3.1 is Llama AI Online

2. How can I access Meta Llama 3.1?

You can access Meta Llama 3.1 and its resources through the official website, llama.meta.com. The model card and usage instructions are available in Meta’s GitHub repository.

3. What makes Meta Llama 3.1 different from previous versions?

Meta Llama 3.1 adds a 405-billion-parameter model, one of the largest openly available language models. Compared with Llama 3, all Llama 3.1 models extend the context window from 8K to 128K tokens, add official multilingual support, and improve tool use, with higher scores on most benchmarks.

4. What are the main applications of Meta Llama 3.1 405B?

Meta Llama 3.1 is designed for various applications, including text generation, language translation, and conversation systems, making it a versatile tool for developers and researchers.

5. How does Meta Llama 3.1 improve natural language processing tasks?

Its larger parameter count and longer 128K-token context window let Meta Llama 3.1 produce more accurate and contextually relevant outputs, which shows up as higher scores on standard natural language processing benchmarks.

6. Where can I find the model card for Meta Llama 3.1?

The model card for Meta Llama 3.1 can be found on Meta’s official GitHub repository. It includes detailed information about the model’s capabilities, usage guidelines, and technical specifications.

7. Is Meta Llama 3.1 available for open-source use?

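Yes, with conditions. Meta releases the Llama 3.1 model weights openly under the Llama 3.1 Community License, which permits research and commercial use subject to its terms. Meta also provides documentation and resources to help developers integrate and use the model effectively.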

8. How can I use Meta Llama 3.1 for online chat applications?

Meta Llama 3.1 can be integrated into online chat applications for enhanced conversational abilities. You can leverage its advanced natural language understanding to create more interactive and responsive chatbots.
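As a minimal sketch of that integration, the Python function below renders a chat history into the special-token prompt format Meta documents for Llama 3.1 Instruct models. The helper name `build_llama31_prompt` is hypothetical, and the sketch covers only the system, user, and assistant roles (no tool calls). Hugging Face's `tokenizer.apply_chat_template` may insert extra system text, such as the knowledge-cutoff date, so prefer it when you use that library.

```python
from typing import Dict, List

# Special tokens from Meta's Llama 3.1 Instruct prompt format.
BEGIN_OF_TEXT = "<|begin_of_text|>"
END_OF_TURN = "<|eot_id|>"
SUPPORTED_ROLES = {"system", "user", "assistant"}  # tool roles omitted in this sketch


def _header(role: str) -> str:
    """Role header that opens every turn."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def build_llama31_prompt(messages: List[Dict[str, str]]) -> str:
    """Hypothetical helper: render a chat history as a Llama 3.1 Instruct prompt."""
    parts = [BEGIN_OF_TEXT]
    for message in messages:
        role = message["role"]
        if role not in SUPPORTED_ROLES:
            raise ValueError(f"unsupported role: {role!r}")
        # Each turn: header, trimmed content, end-of-turn token.
        parts.append(_header(role) + message["content"].strip() + END_OF_TURN)
    # Open an assistant turn so the model writes the next reply.
    parts.append(_header("assistant"))
    return "".join(parts)


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "You are a concise customer-support assistant."},
        {"role": "user", "content": "How do I reset my password?"},
    ]
    print(build_llama31_prompt(history))
```

The prompt ends with an open assistant header, so the model's completion is the next reply, and generation should stop at `<|eot_id|>`.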

9. What resources are available for learning how to use Meta Llama 3.1?

Meta offers extensive resources, including a detailed model card, usage instructions, and examples in its GitHub repository. You can also explore Meta Llama 3.1 directly at https://llamaai.online/.

10. Can Meta Llama 3.1 be used for language translation tasks?

Yes. Meta Llama 3.1 performs well on translation tasks, particularly among its eight officially supported languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

Online Llama 3.1 405B Chat: An In-depth Guide


Table of Contents

- What is Llama 3.1 405B?

- Importance of Llama 3.1 405B to Meta AI

- Benefits of Using Online Llama 3.1 405B Chat

- Suitable Scenarios for Using Online Llama 3.1 405B Chat

- Who Can Use Online Llama 3.1 405B Chat

- Alternatives to Llama 3.1 405B Models and Pros & Cons

What is Llama 3.1 405B?

Llama 3.1 405B is the latest iteration of Meta AI’s Llama series, boasting significant advancements in natural language processing and understanding. This model features 405 billion parameters, making it one of the most powerful AI models to date. Its primary applications include language translation, conversational AI, and advanced text analysis.

Importance of Llama 3.1 405B to Meta AI

The Llama 3.1 405B model is a cornerstone of Meta AI’s strategy to push the boundaries of AI capabilities. Its vast parameter set allows for more nuanced and accurate language processing, which is crucial for developing next-generation AI applications. This model supports a wide range of tasks, from simple chatbots to complex data analysis tools, underscoring Meta AI’s commitment to innovation.

Benefits of Using Online Llama 3.1 405B Chat

Enhanced Performance

The online Llama 3.1 405B chat offers high response accuracy, reflected in the benchmark results later on this page. Users get a responsive, capable conversational partner that understands and generates human-like text.

Accessibility

An online interface lets users work with Llama 3.1 405B without owning high-end hardware or having deep technical expertise, which matters because the 405B model is far too large for a typical single GPU. This democratizes access to advanced AI capabilities.

Versatility

The online chat platform can be used across various industries, including customer service, education, and content creation. Its ability to understand and generate contextually relevant responses makes it a valuable tool for professionals and enthusiasts alike.

Suitable Scenarios for Using Online Llama 3.1 405B Chat

Customer Support

Businesses can leverage the online Llama 3.1 405B chat for efficient and effective customer support, handling a large volume of queries simultaneously while providing accurate responses.

Educational Tools

Educators and students can use this AI for learning purposes, including language practice, information retrieval, and interactive tutoring sessions.

Content Creation

Writers and marketers can utilize the AI to generate ideas, draft content, and even edit and improve existing texts, streamlining the content creation process.

Who Can Use Online Llama 3.1 405B Chat

The online Llama 3.1 405B chat is designed for a wide range of users, including:

- Businesses: For improving customer interaction and support services.

- Educators and Students: As a learning aid and information resource.

- Content Creators: To enhance productivity and creativity in content generation.

- Researchers: For conducting advanced text analysis and language-related studies.

Alternatives to Llama 3.1 405B Models and Pros & Cons

| Model | Pros | Cons |
|---|---|---|
| GPT-4 | Highly advanced, extensive training data | Closed weights, available only through hosted services; requires significant computational resources |
| BERT | Excellent for understanding context in text | Encoder-only; not designed for open-ended text generation |
| T5 | Versatile and powerful in both understanding and generation | Can be slower due to its complexity |
| RoBERTa | Improved robustness and performance over BERT | Encoder-only like BERT; limited to specific tasks, less versatile |

Llama 3.1 Model Specifications Overview

The table below summarizes the key specifications of the Llama 3.1 8B, 70B, and 405B models: training data, parameter count, input and output modalities, context length, use of grouped-query attention (GQA), number of pretraining tokens, and knowledge cutoff. All three models share the same training data mix, context length, and cutoff; they differ mainly in size.

| Model | Training Data | Params | Input Modalities | Output Modalities | Context Length | Grouped-Query Attention (GQA) | Pretraining Tokens | Knowledge Cutoff |
|---|---|---|---|---|---|---|---|---|
| 8B | A new mix of publicly available online data | 8B | Multilingual text | Multilingual text and code | 128K | Yes | 15T+ | December 2023 |
| 70B | A new mix of publicly available online data | 70B | Multilingual text | Multilingual text and code | 128K | Yes | 15T+ | December 2023 |
| 405B | A new mix of publicly available online data | 405B | Multilingual text | Multilingual text and code | 128K | Yes | 15T+ | December 2023 |
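The Params column translates directly into hardware requirements. As a rough sketch, the snippet below estimates the memory needed just to hold each model's weights. The bytes-per-parameter values for bf16, fp8, and int4 are assumptions for common storage precisions, and the estimate ignores the KV cache, activations, and the small gap between nominal and exact parameter counts.

```python
# Nominal parameter counts from the Params column (exact counts differ slightly).
PARAMS = {"8B": 8e9, "70B": 70e9, "405B": 405e9}

# Assumed storage cost per parameter for common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory in decimal GB (1e9 bytes) needed only to store the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, count in PARAMS.items():
        cells = ", ".join(
            f"{precision}: {weight_memory_gb(count, precision):,.0f} GB"
            for precision in BYTES_PER_PARAM
        )
        print(f"Llama 3.1 {name} -> {cells}")
```

At bf16, the 405B weights alone come to roughly 810 GB, more than the 640 GB offered by a single node of eight 80 GB GPUs, which is one reason a hosted chat interface is the practical option for most users.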

Environmental Impact and Resource Usage of Llama 3.1 Models

The table below lists the training compute (in GPU hours), the peak power per GPU, and the estimated greenhouse gas emissions for each Llama 3.1 model. Location-based emissions use the carbon intensity of the local electricity grid; market-based emissions are zero because Meta matches its electricity use with renewable energy.

| Model | Training Time (GPU hours) | Training Power Consumption (W, peak per GPU) | Location-Based Greenhouse Gas Emissions (tons CO2eq) | Market-Based Greenhouse Gas Emissions (tons CO2eq) |
|---|---|---|---|---|
| Llama 3.1 8B | 1.46M | 700 | 420 | 0 |
| Llama 3.1 70B | 7.0M | 700 | 2,040 | 0 |
| Llama 3.1 405B | 30.84M | 700 | 8,930 | 0 |
| Total | 39.3M | – | 11,390 | 0 |
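The Total row, and the link between training compute and emissions, can be checked with a few lines of Python. This sketch uses only the table's GPU-hour and location-based emission figures; the per-GPU-hour intensity it prints is a derived quantity, not a number Meta reports.

```python
# (training GPU hours, location-based emissions in tons CO2eq) from the table.
TRAINING = {
    "Llama 3.1 8B": (1.46e6, 420),
    "Llama 3.1 70B": (7.0e6, 2_040),
    "Llama 3.1 405B": (30.84e6, 8_930),
}


def column_totals(rows):
    """Sum GPU hours and emissions across models (the table's Total row)."""
    gpu_hours = sum(hours for hours, _ in rows.values())
    tons = sum(emitted for _, emitted in rows.values())
    return gpu_hours, tons


def kg_per_gpu_hour(gpu_hours: float, tons: float) -> float:
    """Derived emission intensity: kilograms CO2eq per GPU hour."""
    return tons * 1000 / gpu_hours


if __name__ == "__main__":
    hours, tons = column_totals(TRAINING)
    print(f"Total: {hours / 1e6:.1f}M GPU hours, {tons:,} tons CO2eq")
    for model, (h, t) in TRAINING.items():
        print(f"{model}: {kg_per_gpu_hour(h, t):.3f} kg CO2eq per GPU hour")
```

All three models come out at roughly 0.29 kg CO2eq per GPU hour, so location-based emissions scale almost linearly with training compute.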

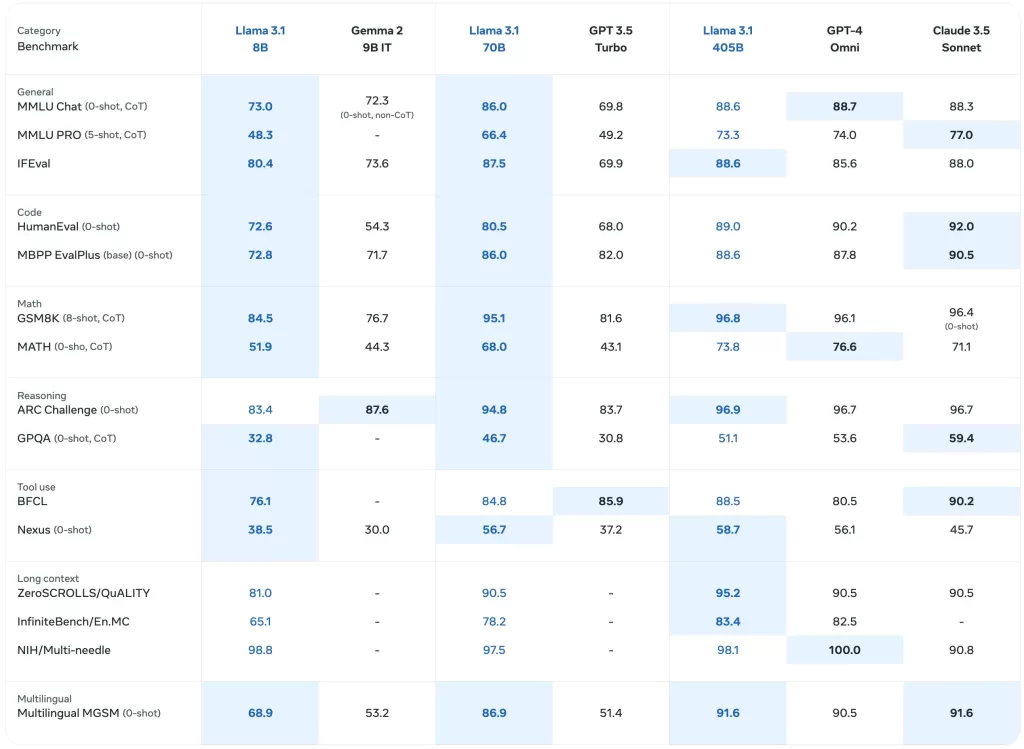

Benchmark Performance of Llama 3.1 Models

The following tables compare Llama 3 and Llama 3.1 models, including Llama 3.1 405B, on standard benchmarks covering general knowledge, reasoning, reading comprehension, code, math, tool use, and multilingual tasks. Together they show how the model behind the online Llama 3.1 405B Chat compares with its predecessors and smaller siblings.

Base Pretrained Models

The table below compares the pretrained (base) Llama 3 and Llama 3.1 models, before instruction tuning, on general, knowledge-reasoning, and reading-comprehension benchmarks. "# Shots" is the number of worked examples included in the prompt, and "–" marks results that are not reported.

| Category | Benchmark | # Shots | Metric | Llama 3 8B | Llama 3.1 8B | Llama 3 70B | Llama 3.1 70B | Llama 3.1 405B |
|---|---|---|---|---|---|---|---|---|
| General | MMLU | 5 | macro_avg/acc_char | 66.7 | 66.7 | 79.5 | 79.3 | 85.2 |
| | MMLU-Pro (CoT) | 5 | macro_avg/acc_char | 36.2 | 37.1 | 55.0 | 53.8 | 61.6 |
| | AGIEval English | 3-5 | average/acc_char | 47.1 | 47.8 | 63.0 | 64.6 | 71.6 |
| | CommonSenseQA | 7 | acc_char | 72.6 | 75.0 | 83.8 | 84.1 | 85.8 |
| | Winogrande | 5 | acc_char | – | 60.5 | – | 83.3 | 86.7 |
| | BIG-Bench Hard (CoT) | 3 | average/em | 61.1 | 64.2 | 81.3 | 81.6 | 85.9 |
| | ARC-Challenge | 25 | acc_char | 79.4 | 79.7 | 93.1 | 92.9 | 96.1 |
| Knowledge Reasoning | TriviaQA-Wiki | 5 | em | 78.5 | 77.6 | 89.7 | 89.8 | 91.8 |
| Reading Comprehension | SQuAD | 1 | em | 76.4 | 77.0 | 85.6 | 81.8 | 89.3 |
| | QuAC (F1) | 1 | f1 | 44.4 | 44.9 | 51.1 | 51.1 | 53.6 |
| | BoolQ | 0 | acc_char | 75.7 | 75.0 | 79.0 | 79.4 | 80.0 |
| | DROP (F1) | 3 | f1 | 58.4 | 59.5 | 79.7 | 79.6 | 84.8 |
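Several metrics in these tables are labeled `macro_avg` (for example, MMLU) or `micro_avg` (MMLU-Pro in the instruct table). The sketch below shows the difference on hypothetical toy data: micro-averaging pools every question, while macro-averaging scores each subject separately and weights all subjects equally. The function names and data are illustrative, not Meta's evaluation code.

```python
from typing import Dict, Tuple

# subject -> (correct answers, total questions)
Results = Dict[str, Tuple[int, int]]


def micro_avg(results: Results) -> float:
    """Pool every question across subjects: total correct / total questions."""
    correct = sum(c for c, _ in results.values())
    total = sum(n for _, n in results.values())
    return correct / total


def macro_avg(results: Results) -> float:
    """Score each subject on its own, then take the unweighted mean."""
    return sum(c / n for c, n in results.values()) / len(results)


if __name__ == "__main__":
    # Hypothetical toy data, not real MMLU results.
    toy = {"algebra": (9, 10), "law": (1, 2)}
    print(f"micro={micro_avg(toy):.2f} macro={macro_avg(toy):.2f}")
```

On the toy data, the small "law" subject pulls the macro average down to 0.70, while the micro average stays at about 0.83.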

Instruction Tuned Models

The table below reports results for the instruction-tuned (Instruct) variants, which are fine-tuned to follow instructions and power chat applications such as the online Llama 3.1 405B Chat. It covers general knowledge, instruction following (IFEval), reasoning, code, math, tool use, and multilingual math.

| Category | Benchmark | # Shots | Metric | Llama 3 8B Instruct | Llama 3.1 8B Instruct | Llama 3 70B Instruct | Llama 3.1 70B Instruct | Llama 3.1 405B Instruct |
|---|---|---|---|---|---|---|---|---|
| General | MMLU | 5 | macro_avg/acc | 68.5 | 69.4 | 82.0 | 83.6 | 87.3 |
| | MMLU (CoT) | 0 | macro_avg/acc | 65.3 | 73.0 | 80.9 | 86.0 | 88.6 |
| | MMLU-Pro (CoT) | 5 | micro_avg/acc_char | 45.5 | 48.3 | 63.4 | 66.4 | 73.3 |
| | IFEval | – | – | 76.8 | 80.4 | 82.9 | 87.5 | 88.6 |
| Reasoning | ARC-C | 0 | acc | 82.4 | 83.4 | 94.4 | 94.8 | 96.9 |
| | GPQA | 0 | em | 34.6 | 30.4 | 39.5 | 41.7 | 50.7 |
| Code | HumanEval | 0 | pass@1 | 60.4 | 72.6 | 81.7 | 80.5 | 89.0 |
| | MBPP++ base version | 0 | pass@1 | 70.6 | 72.8 | 82.5 | 86.0 | 88.6 |
| | MultiPL-E HumanEval | 0 | pass@1 | – | 50.8 | – | 65.5 | 75.2 |
| | MultiPL-E MBPP | 0 | pass@1 | – | 52.4 | – | 62.0 | 65.7 |
| Math | GSM-8K (CoT) | 8 | em_maj1@1 | 80.6 | 84.5 | 93.0 | 95.1 | 96.8 |
| | MATH (CoT) | 0 | final_em | 29.1 | 51.9 | 51.0 | 68.0 | 73.8 |
| Tool Use | API-Bank | 0 | acc | 48.3 | 82.6 | 85.1 | 90.0 | 92.0 |
| | BFCL | 0 | acc | 60.3 | 76.1 | 83.0 | 84.8 | 88.5 |
| | Gorilla Benchmark API Bench | 0 | acc | 1.7 | 8.2 | 14.7 | 29.7 | 35.3 |
| | Nexus (0-shot) | 0 | macro_avg/acc | 18.1 | 38.5 | 47.8 | 56.7 | 58.7 |
| Multilingual | Multilingual MGSM (CoT) | 0 | em | – | 68.9 | – | 86.9 | 91.6 |
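The code benchmarks in this table (HumanEval, MBPP++, MultiPL-E) are scored as pass@1. The sketch below implements the widely used unbiased pass@k estimator introduced with the HumanEval benchmark (Chen et al., 2021). Meta's exact harness, such as greedy versus sampled decoding, is not described here, so treat this as an illustration of the metric rather than a reproduction of these numbers; `benchmark_pass_at_k` is a hypothetical helper name.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem.

    n: solutions sampled, c: solutions that pass the unit tests,
    k: attempts allowed. Returns the probability that a random
    size-k subset of the n samples contains at least one passing solution.
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset must include a passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(per_problem: Iterable[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems given as (n, c) pairs."""
    scores = [pass_at_k(n, c, k) for n, c in per_problem]
    return sum(scores) / len(scores)
```

For example, `pass_at_k(10, 3, 1)` is about 0.3: with 3 of 10 samples passing, one random attempt succeeds 30% of the time. With a single greedy sample per problem (n = 1), pass@1 is simply the share of problems solved.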

Multilingual Benchmarks

The table below reports 5-shot MMLU accuracy for the Llama 3.1 models in Portuguese, Spanish, Italian, German, French, Hindi, and Thai, showing how well each model handles non-English input. It is a useful reference when deploying the online Llama 3.1 405B Chat in different linguistic contexts.

| Category | Benchmark | Language | Llama 3.1 8B | Llama 3.1 70B | Llama 3.1 405B |
|---|---|---|---|---|---|
| General | MMLU (5-shot, macro_avg/acc) | Portuguese | 62.12 | 80.13 | 84.95 |
| | | Spanish | 62.45 | 80.05 | 85.08 |
| | | Italian | 61.63 | 80.4 | 85.04 |
| | | German | 60.59 | 79.27 | 84.36 |
| | | French | 62.34 | 79.82 | 84.66 |
| | | Hindi | 50.88 | 74.52 | 80.31 |
| | | Thai | 50.32 | 72.95 | 78.21 |
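To quantify the pattern in this table, the sketch below computes each model's mean score across the seven languages and the spread between its best and worst language. The scores are copied from the table; the summary statistics are derived here and are not reported by Meta.

```python
from statistics import mean

# 5-shot MMLU (macro_avg/acc) scores from the multilingual table.
MMLU_BY_LANGUAGE = {
    "Llama 3.1 8B": {"Portuguese": 62.12, "Spanish": 62.45, "Italian": 61.63,
                     "German": 60.59, "French": 62.34, "Hindi": 50.88, "Thai": 50.32},
    "Llama 3.1 70B": {"Portuguese": 80.13, "Spanish": 80.05, "Italian": 80.4,
                      "German": 79.27, "French": 79.82, "Hindi": 74.52, "Thai": 72.95},
    "Llama 3.1 405B": {"Portuguese": 84.95, "Spanish": 85.08, "Italian": 85.04,
                       "German": 84.36, "French": 84.66, "Hindi": 80.31, "Thai": 78.21},
}


def summarize(scores: dict) -> tuple:
    """Return (mean score, best-minus-worst spread, lowest-scoring language)."""
    values = list(scores.values())
    worst = min(scores, key=scores.get)
    return mean(values), max(values) - min(values), worst


if __name__ == "__main__":
    for model, scores in MMLU_BY_LANGUAGE.items():
        avg, spread, worst = summarize(scores)
        print(f"{model}: mean {avg:.2f}, spread {spread:.2f} (lowest: {worst})")
```

The spread shrinks from about 12.1 points for the 8B model to about 6.9 points for the 405B model, with Hindi and Thai trailing the European languages at every size.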

Model evaluations with Benchmarks